
I am a PhD student in NeuroAI at McGill Computer Science and Mila, supervised by Professors Doina Precup and Paul Masset. I study the interplay between short- and long-term decision-making in the human brain using reinforcement learning and dopaminergic neuron modeling. Previously, I worked for two years as a computer vision researcher and 3D reconstruction engineer at Algolux, a self-driving car software startup, and at Magicplan (Sensopia Inc.), an augmented reality mobile app that maps indoor environments. I did my master's in Electrical and Computer Engineering at McGill under the supervision of Prof. James J. Clark, where I researched visual attention and distraction during visual search tasks, using deep learning and eye-tracking data to predict saliency. I completed my undergraduate degree in Electrical Engineering at Shahid Beheshti University, focusing on telecommunications and signal processing. I also researched end-to-end lane following in autonomous vehicles using CNNs. I'm passionate about cognitive science, AI, and healthcare applications.

Email / LinkedIn / Google Scholar / Twitter / GitHub


15/09/2023 I am joining Mila soon as an AI researcher!

09/08/2022 Moving to Toronto, Ontario on 1st September!

01/08/2021 I have officially graduated from McGill with a Master of Science in Electrical Engineering!

16/08/2021 I am starting as a computer vision researcher at Algolux!


Master's Research, 2021

Manoosh Samiei, James J. Clark

GitHub Code / Thesis

Our dataset analysis report is available on arXiv: Code / Paper

One publication in the Journal of Vision is in progress: arXiv Pre-print

We present two approaches. Our first method uses a two-stream encoder-decoder network to predict fixation density maps of human observers in visual search. Our second method predicts the segmentation of distractor and target objects during search using a Mask R-CNN segmentation network. We use the COCO-Search18 dataset to train, fine-tune, and evaluate our models.


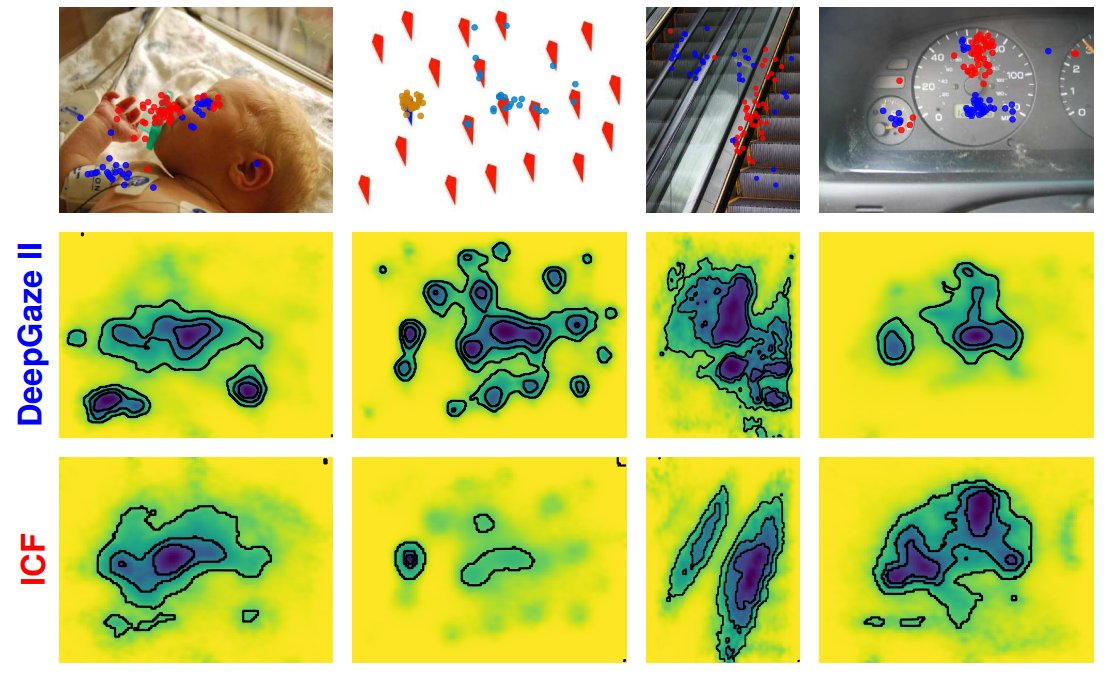

GitHub / Report

DeepGaze II extracts high-level image features using a VGG-19 convolutional neural network pretrained for object recognition. It is trained in a log-likelihood learning framework and aims to predict where humans look while free-viewing a set of images.
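As a rough illustration of what a log-likelihood objective means for saliency prediction, the sketch below treats a model's output as a probability distribution over pixels and scores it by the average log-probability it assigns to observed fixations. This is a toy example in plain Python, not the DeepGaze II code: the function names, the 2x2 map, and the fixation list are all made up for illustration.

```python
import math

def log_density(logits):
    """Log-softmax over all pixels: turns raw scores into log-probabilities."""
    flat = [v for row in logits for v in row]
    m = max(flat)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(v - m) for v in flat))
    return [[v - log_z for v in row] for row in logits]

def mean_log_likelihood(logits, fixations):
    """Average log-probability of the fixated pixels, given as (row, col)."""
    logp = log_density(logits)
    return sum(logp[r][c] for r, c in fixations) / len(fixations)

# Hypothetical 2x2 prediction that favors the bottom-right pixel.
logits = [[0.0, 0.0], [0.0, 2.0]]
# Hypothetical fixations: two on the favored pixel, one elsewhere.
fixations = [(1, 1), (1, 1), (0, 0)]

ll = mean_log_likelihood(logits, fixations)
uniform_ll = math.log(1 / 4)  # baseline: every pixel equally likely
```

Training would maximize `ll` over the model parameters; a prediction is informative when its log-likelihood exceeds that of the uniform baseline.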


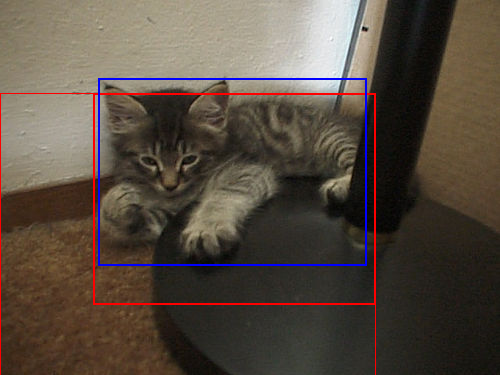

GitHub / Report / Video

We implemented two papers that formulate object localization as a dynamic Markov decision process (MDP) solved with deep reinforcement learning. We compare two different action settings for this MDP: a hierarchical method and a dynamic method.
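To give a feel for how localization can be cast as an MDP, here is a minimal sketch in which the state is a bounding box, each action shifts the box, and the reward is the sign of the change in intersection over union (IoU) with the target. The action set, the step size `alpha`, and the reward rule are assumptions chosen for illustration; they are not necessarily the exact settings of the two papers.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)

# Hypothetical action set: unit direction of each box translation.
ACTIONS = {"right": (1, 0), "left": (-1, 0), "down": (0, 1), "up": (0, -1)}

def step(box, action, target, alpha=0.2):
    """Shift the box by alpha times its size; reward +1 if IoU improves, else -1."""
    dx, dy = ACTIONS[action]
    w, h = box[2] - box[0], box[3] - box[1]
    new_box = (box[0] + dx * alpha * w, box[1] + dy * alpha * h,
               box[2] + dx * alpha * w, box[3] + dy * alpha * h)
    reward = 1 if iou(new_box, target) > iou(box, target) else -1
    return new_box, reward

# Toy episode step: the target lies to the right of the current box.
box, target = (0, 0, 10, 10), (5, 0, 15, 10)
moved_right, reward_right = step(box, "right", target)
moved_left, reward_left = step(box, "left", target)
```

A hierarchical variant would instead let each action zoom into a fixed sub-region of the current box, so the agent narrows the search top-down rather than sliding the box freely.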


NeurIPS 2019 Reproducibility Challenge

Report

We replicated the results of the paper “CNN2: Viewpoint Generalization via a Binocular Vision” on two datasets, SmallNORB and ModelNet2D.


Thesis in Persian


Volunteer at the poster sessions of the Montreal AI Symposium 2020 and WiML 2020. Helped attendees locate posters and resolve technical issues on the Gather Town platform.


Source code and style from Jon Barron's website